That nod of the head or batting of the eyes can go a long way toward signalling what you want from someone else, like that cup of coffee a friend is cradling in his hands.

Although machines aren't so attuned to those subtle gestures, University of B.C. researchers have developed programming to make robots much more perceptive of those oh-so-human nuances.

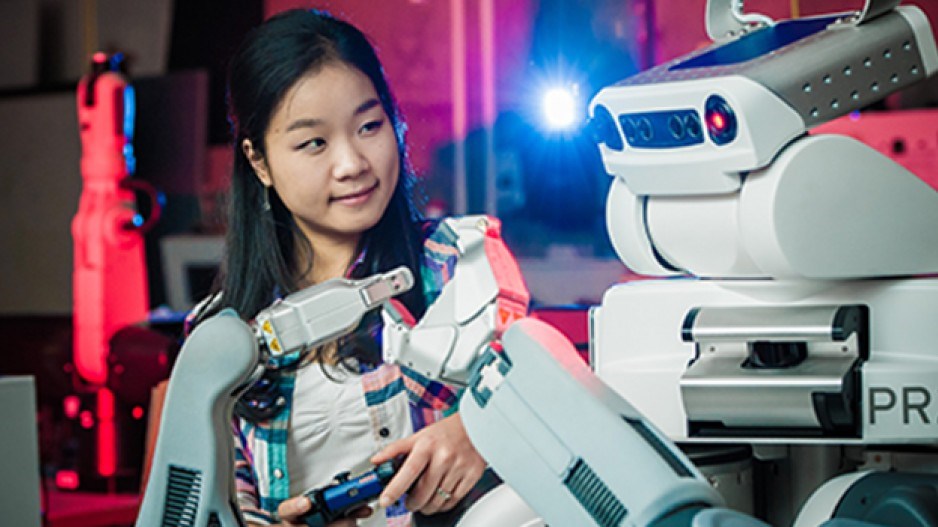

UBC PhD student AJung Moon, who co-authored a study honoured as best paper at last month's IEEE International Conference on Human-Robot Interaction, said her team's research makes the automatons much more viable for household chores or helping paraplegics.

"It's actually letting us know how little, subtle things like gestures (and) gaze cues can actually make an impact on how conveniently we can actually interact with a robot," she said, "especially when it's doing something very, very trivial, like handovers, which is something that people know how to do and robots should know how to do, but it's a very big technical challenge in robotics."

Moon and her fellow researchers looked at how humans move their heads, necks and eyes when they hand objects to each other.

They then programmed the robot to recognize non-verbal cues, such as eye gaze, to make exchanging objects between humans and machines a much smoother operation.

Moon said that before this, passing a cup of coffee could get a little messy if the robot would release its grip only when the human used very specific motions, like pulling the cup upward.

Despite this breakthrough in human-to-robot interaction, she admitted household robots are still likely decades away from being an everyday presence.

Moon said the most challenging aspect of introducing robots into daily use is the cost.